Google's Gemini-Exp-1206 is Outperforming GPT-4o and O1

Google's Gemini-Exp-1206 is quickly making waves in the world of generative AI. Released in December 2024, it is already outperforming OpenAI's GPT-4o and o1, Claude 3.5 Sonnet, and Gemini 1.5 on LMArena.

In this blog, we will cover the key features, performance benchmarks, and real-world applications of Google's Gemini-Exp-1206, and what the hype is all about.

Understanding Gemini-Exp-1206

Gemini-Exp-1206 is the newest large language model (LLM) in Google’s experimental Gemini series, designed to be multilingual, handle multi-modal inputs like text, voice and images, and achieve top-tier performance across diverse AI tasks. As part of Google's larger strategy to integrate advanced machine learning models into real-world applications, Gemini-Exp-1206 has quickly captured attention for its capabilities across creative, technical, and conversational domains.

Despite being a prototype, Gemini-Exp-1206 has distinguished itself by excelling in challenging benchmarks. It represents the culmination of iterative improvements in the Gemini series, showcasing innovations in multi-tasking, contextual understanding, and creative problem-solving.

How can you access Gemini-Exp-1206?

Gemini-Exp-1206 can be accessed through Google AI Studio and the Gemini API. Developers use Helicone to monitor, debug, and improve their LLM apps.
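To make the API route concrete, here is a minimal sketch of a text request using only Python's standard library. It assumes the public `generateContent` REST endpoint of the Gemini API, that the model ID `gemini-exp-1206` is still being served (experimental IDs can be retired), and an environment variable named `GEMINI_API_KEY`, which is our own naming choice. The helper names `build_request` and `generate` are illustrative, not part of any SDK.

```python
import json
import os
import urllib.request

# Public REST base URL for the Gemini API (v1beta).
API_BASE = "https://generativelanguage.googleapis.com/v1beta"


def build_request(prompt: str, api_key: str,
                  model: str = "gemini-exp-1206") -> urllib.request.Request:
    """Build (but do not send) a generateContent request for the given model."""
    url = f"{API_BASE}/models/{model}:generateContent?key={api_key}"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, api_key: str) -> str:
    """Send the request and return the text of the first candidate."""
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        payload = json.load(resp)
    return payload["candidates"][0]["content"]["parts"][0]["text"]


if __name__ == "__main__":
    # GEMINI_API_KEY is an assumed variable name; nothing is sent without it.
    key = os.environ.get("GEMINI_API_KEY")
    if key:
        print(generate("Explain what a context window is in one sentence.", key))
```

Separating request construction from sending keeps the sketch easy to inspect and test without a network call or a real key.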

Key Performance Metrics

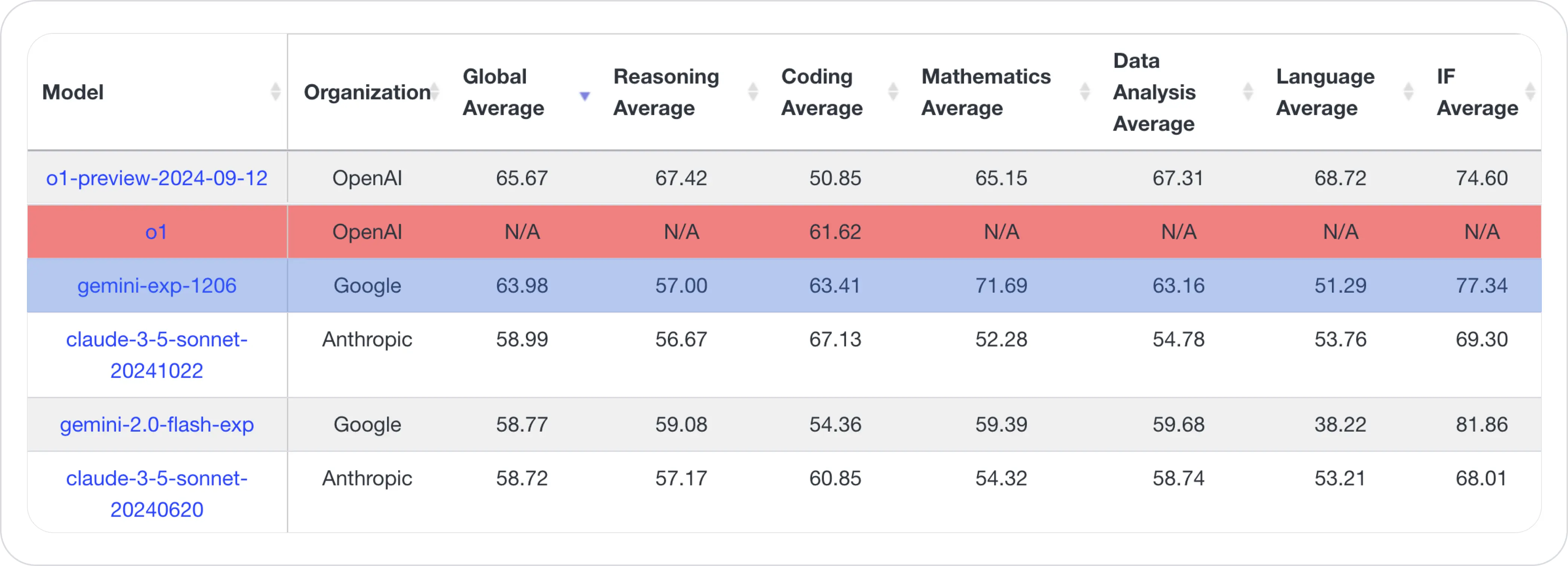

Gemini-Exp-1206 has achieved top rankings on several AI leaderboards, including #1 overall on the Chatbot Arena leaderboard, and #2 on the coding average and #1 on the mathematics average on the LiveBench leaderboard.

1. Chatbot Arena Performance

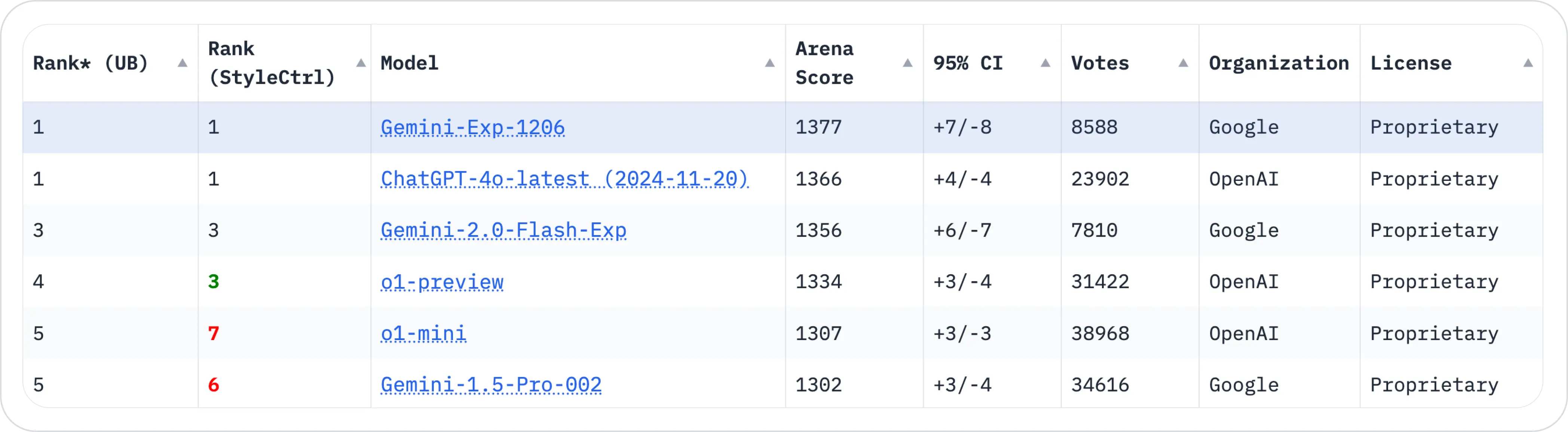

Gemini-Exp-1206 has demonstrated impressive performance by achieving an Arena score of 1377, surpassing ChatGPT-4o's score of 1366.

It excels at handling hard prompts, effectively managing complex queries that require nuanced and detailed responses. Moreover, Gemini-Exp-1206 is adept at generating style-controlled output, allowing it to adjust tone, structure, and content to meet specific user needs. Furthermore, it performs well in multi-turn dialogues, maintaining contextual awareness and memory to navigate sustained conversations with ease.
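To put the Arena scores quoted above in perspective, the sketch below converts a score gap into an expected head-to-head preference rate. It assumes the conventional Elo-style scale (base 10, 400-point divisor); the function name `expected_win_rate` is ours, and the leaderboard's exact fitting procedure may differ.

```python
def expected_win_rate(score_a: float, score_b: float) -> float:
    """Expected probability that model A is preferred over model B,
    assuming a standard Elo-style scale (base 10, divisor 400)."""
    return 1.0 / (1.0 + 10 ** ((score_b - score_a) / 400.0))


# Scores from the article: Gemini-Exp-1206 (1377) vs ChatGPT-4o (1366).
p = expected_win_rate(1377, 1366)
print(f"{p:.3f}")  # prints 0.516
```

Under that assumption, an 11-point lead corresponds to being preferred in roughly 52% of direct comparisons, so the gap at the top of the leaderboard is real but narrow.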

2. Domain-Specific Rankings

| Domain | Capabilities |
|---|---|
| Coding | Excels at offering optimized code suggestions and effective debugging solutions, streamlining development processes |
| Mathematics | Ability to solve complex and advanced problems with precision |
| Creative Writing | Shows remarkable originality and adaptability, producing content that is both engaging and inventive |

Additionally, it excels at instruction following, delivering clear, concise, step-by-step guidance. Compared to its predecessor Gemini-Exp-1114, the 1206 version shows a lower margin of error and higher reliability. However, it still lags behind GPT-4 in terms of stability during extensive public testing.

What sets Gemini-Exp-1206 apart?

Massive Context Window

Gemini-exp-1206 has a 2,097,152 token context window, significantly larger than most publicly available LLMs. This allows the model to:

- Process and understand extremely long pieces of text

- Maintain context across extensive documents

- Handle large codebases more effectively

- Tackle complex reasoning tasks with a broader range of information
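As a rough illustration of what that window size means in practice, the sketch below estimates whether a document would fit. The 4-characters-per-token ratio is only a rule of thumb for English text and the reserved output budget is an arbitrary assumption; both helper names are hypothetical.

```python
CONTEXT_WINDOW_TOKENS = 2_097_152  # figure quoted in the article (2**21)
CHARS_PER_TOKEN = 4                # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(text: str, reserved_for_output: int = 8_192) -> bool:
    """Check whether the text plus an output budget fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    sample = "x" * 1_000_000  # about one million characters
    print(estimate_tokens(sample), fits_in_context(sample))
```

By this estimate the window holds on the order of eight million characters of English text. For real workloads, an exact count from the API's token-counting endpoint is a safer basis than a character heuristic.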

Advanced Alignment Techniques

Gemini-Exp-1206 benefits from enhanced reinforcement learning (RL) processes. This fine-tuning enables it to generate responses that align closely with user expectations while maintaining coherence across lengthy interactions.

Free Access

Google has made Gemini-Exp-1206 freely available through Google AI Studio and the Gemini API. Some developers have turned away from OpenAI o1 and are using Gemini-Exp-1206 instead of paying for the ChatGPT Pro subscription. This accessibility allows developers and researchers to experiment with and integrate cutting-edge AI capabilities without cost barriers.

Integrate your Gemini app in seconds ⚡️

Start monitoring your Gemini app with Helicone.

How Gemini-Exp-1206 Compares With Other Models

1. Gemini vs OpenAI’s GPT-4 and o1

Gemini-Exp-1206 surpasses GPT-4 in categories like hard prompts and creative tasks. However, GPT-4 leads in stability and widespread adoption, with over 21,000 user votes compared to Gemini’s 5,000 in evaluations such as the Chatbot Arena leaderboard. Developers have reported that Gemini-Exp-1206 generates responses notably faster than OpenAI o1 and has a significantly larger context window.
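The vote counts matter because fewer votes mean a noisier ranking. As a simplified sketch, the snippet below treats each vote as an independent coin flip against a single opponent and compares the standard error of an observed win rate at the two vote counts mentioned above. Real leaderboard votes are spread across many pairings, so this is only an order-of-magnitude illustration.

```python
import math


def standard_error(win_rate: float, votes: int) -> float:
    """Standard error of a win rate estimated from independent votes."""
    return math.sqrt(win_rate * (1.0 - win_rate) / votes)


se_small = standard_error(0.5, 5_000)   # about 5,000 votes
se_large = standard_error(0.5, 21_000)  # about 21,000 votes
print(f"{se_small:.4f} {se_large:.4f} {se_small / se_large:.2f}")
# prints 0.0071 0.0035 2.05
```

Under these assumptions, the estimate based on 5,000 votes is about twice as uncertain as the one based on 21,000.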

2. Gemini vs Meta’s Llama 3.3

Meta's Llama 3.3, the newest open-source model and also released in December 2024, excels in cost-efficiency and inference speed. Although impressive, Llama 3.3 70B ranks slightly lower, performing similarly to GPT-4o on some benchmarks.

Gemini-Exp-1206 outshines it in advanced reasoning and task generalization. While Gemini-exp-1206 currently holds an edge in overall performance and multimodal capabilities, Llama 3.3 70B has impressive results for an open-source model, particularly as a cost-effective local deployment option.

-> Want to know how Gemini-Exp-1206 compares with other models? Check out our free model comparison tool.

Applications Across Industries

In software development, Gemini-Exp-1206’s advanced coding capabilities empower developers to streamline workflows, from automating repetitive tasks like generating boilerplate code to solving intricate debugging challenges.

In education and tutoring, the model’s precision in mathematics and ability to follow instructions make it an ideal tool for creating personalized, interactive learning experiences.

For content creation, its mastery of style control enables writers, marketers, and filmmakers to produce engaging, audience-specific material.

In research and analytics, Gemini-Exp-1206 excels at synthesizing complex data and delivering actionable insights, proving invaluable for researchers and decision-makers alike.

Challenges and Limitations

- As a developing model, it lacks comprehensive testing and reliability compared to established AI systems like GPT-4. The model may not be robust enough for enterprise-scale deployment or production-ready apps.

- Gemini-Exp-1206 remains an experimental prototype with limitations. Limited testing data and fewer user votes raise concerns about its reliability across real-world scenarios.

- Continuous refinement and innovation will be crucial for success in the competitive generative AI landscape.

What’s next for Gemini?

With its tremendous potential for future development, Gemini-Exp-1206 is a positive step forward for Google's generative AI initiatives. Stability improvements are expected to resolve dependability concerns and prepare it for use in production settings.

Bottom line

Gemini-Exp-1206 is pushing the boundaries of performance, setting a high bar for competitors. It is likely an early version of future Gemini iterations, with more advanced versions on the horizon. It's important to note that all AI models come with strengths and limitations. Depending on your use case, Gemini-Exp-1206 might be suitable for you.

Other models you might be interested in:

- O1 and ChatGPT Pro — here's everything you need to know

- GPT-5: release date, features & what to expect

- Llama 3.3 just dropped — is it better than GPT-4 or Claude-Sonnet-3.5?

Questions or feedback?

Is the information out of date? Please raise an issue. We'd love to hear your insights!